I’ve built TQDBProxy to handle heavy read loads by caching SELECT queries with a TTL hint. The proxy worked well for reads. Each application server could keep a local cache and avoid trips to the database. The cache reduced pressure on primary databases and gave predictable latency under heavy read traffic.

But what if not the reads, but the writes are the bottleneck? Each small insert or update creates its own transaction and its own fsync on the database. The database CPU and I/O become saturated. Adding more machines does not help as you cannot easily shard hot tables over multiple database servers.

I think that adding a few milliseconds of latency to allow batching of operations would make sense in many scenarios. Batched operations allow for aggregating write operations and thus higher throughput. I implemented a hint based feature called “batch hints”. Clients can now ask the proxy to wait up to N milliseconds and combine identical write statements into a single batched execution.

Batch hint syntax

Clients add a batch:N comment to write statements. N is the maximum wait

time in milliseconds.

/* batch:10 */ INSERT INTO `logs` (level, message) VALUES (?, ?)

/* batch:50 */ UPDATE `settings` SET value = ? WHERE key = ?

/* batch:5 */ DELETE FROM `sessions` WHERE expired_at < ?

As you see it is as easy as adding a comment to lower the database load of some high frequent write queries.

Behavior and guarantees

- The hint only applies to INSERT, UPDATE and DELETE statements.

- Batch hints are ignored inside transactions (BEGIN/COMMIT).

- Queries are grouped by their query text (make use of parameters).

- The first request to a new group starts a timer for

Nmilliseconds. - Next requests join the group while the timer runs.

- The group executes on timer expiration or on maximum batch size.

- Each request receives its own result or error message.

Why this helps

Many workloads generate many similar writes. Logging, metrics ingestion and lightweight cache updates are examples. Each operation on its own causes an fsync and a protocol round trip. Combining many operations into one transaction reduces the number of fsyncs and reduces the CPU used for networking. The result is dramatically higher throughput.

In practice this means that the proxy has extra work to do, but as the proxy lives on the application servers and it still offloads the primary database. The system as a whole has become more scalable if you can offload the primary. This is true even though the whole system has become less efficient and the total CPU and memory needed to execute these write operations has increased.

Performance

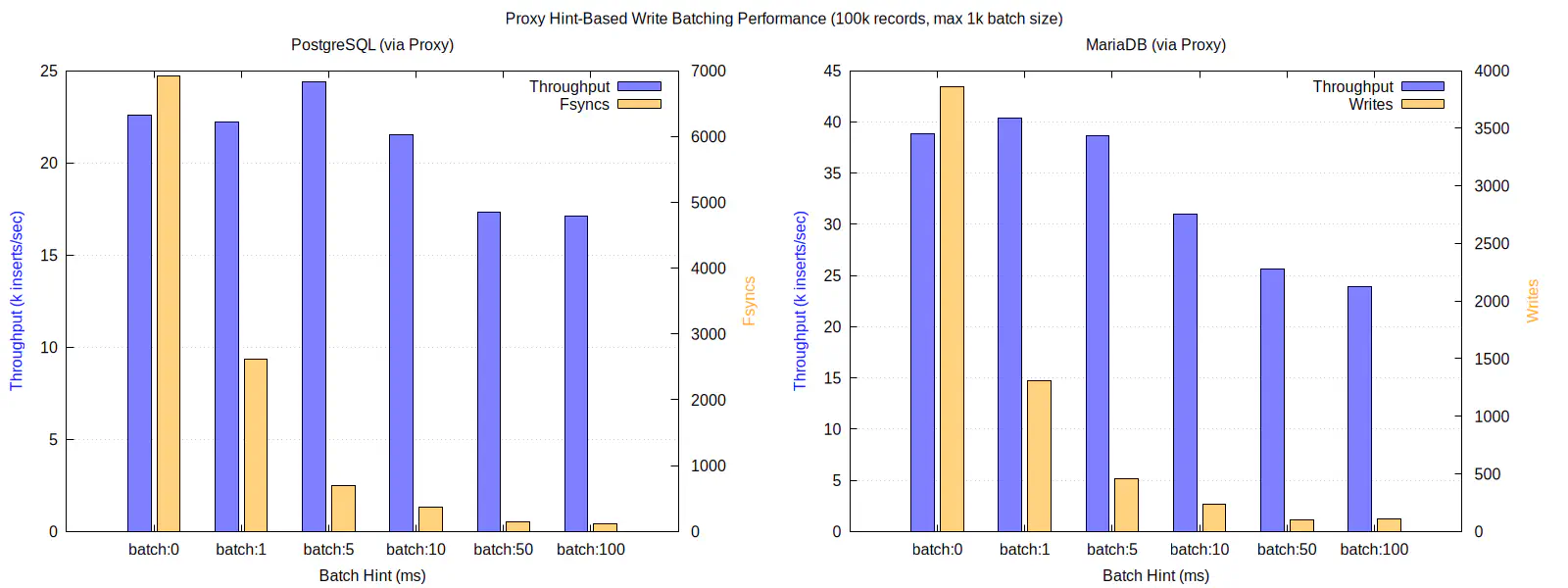

I’ve created several benchmarks proof the perfmance improvements. Under sustained

high write load the batch hints delivered a ten times less writes/fsyncs when

using a batch:10 window. The test delayed statements by at most 10 milliseconds.

The database handled the same number of rows per second with far fewer transactions.

The latency increase stayed predictable and bounded by the hint value.

Implementation notes

The write batch manager keeps a map of batch groups keyed by the normalized query. Each group holds queued requests and a timer. The manager enqueues requests and starts the timer on the first arrival. When the timer fires the manager builds a batched statement and executes it. The manager then splits the result and returns per request responses.

The manager enforces safety rules. It refuses to batch statements that are inside a transaction (to prevent nested transactions). It also respects a maximum batch size. The default maximum batch size is 1000 operations. The manager exposes metrics for batch sizes and delays so you can tune windows in production.

Usage patterns and recommendations

- Use

batch:1for low latency but still some batching. This is suitable for user facing updates where small delays are acceptable. - Use

batch:10for logging and event ingestion. This gives large throughput gains while keeping latency small. - Use

batch:100for background cleanup tasks or offline analytics ingestion where latency does not matter at all.

Conclusion

Batch hints let you trade a bounded amount of latency for a large gain in throughput. For many high write workloads a 10x improvement is realistic when using a 10 millisecond window. Batching reduces transaction count and I/O pressure. The feature is safe to use and easy to enable by adding a single comment to client queries.

See: https://github.com/mevdschee/tqdbproxy